29/10/2025

Since the “ChatGPT moment” in November 2022, the world has been swept up in the excitement surrounding AI. But as the initial fervour begins to wind down, many people are scratching their heads. Is that all there is? Where’s my productivity gain? We have entered the so-called “trough of disillusionment”.

Some argue that we are, and always were, in a hyped-up AI bubble, while others contend that, despite the massive capital investment and energy consumption, the speed at which the technology is developing means the benefits are accruing and will eventually be delivered. A third perspective suggests that, even if we are in a bubble, AI is still a nice new tool to have.

At Nous, we believe that it pays to be realistic about technological progress, but also that there are substantial opportunities ahead for organisations seeking to adopt AI responsibly. Even if AI progress were to stall today, those opportunities would remain with the technology we have now.

The Anna Karenina principle: Success and failure in AI adoption

As Leo Tolstoy never wrote, all organisations that successfully adopt AI are alike; each organisation that unsuccessfully adopts AI is unsuccessful in its own way.

The Anna Karenina principle posits that successful endeavours share common traits, whereas failures can occur in myriad ways. This principle is particularly relevant to AI adoption. For an AI initiative to succeed, all key factors must align perfectly. The absence of even one essential element can lead to failure.

Our work with clients reveals this dynamic. Successful AI adopters consistently demonstrate four essential ingredients, while failures stem from myriad causes: cultural resistance, misaligned incentives, inadequate governance, poor change management. The list goes on.

Four ingredients for success

Vision

Vision is often overlooked, or played down as a nebulous quality, but it is vital for motivating staff and driving transformation.

AI adoption requires making deliberate choices on where to focus effort and resources to achieve sustained organisational performance. It must be grounded in the organisation’s broader strategic priorities and clearly define the role AI will play in supporting them.

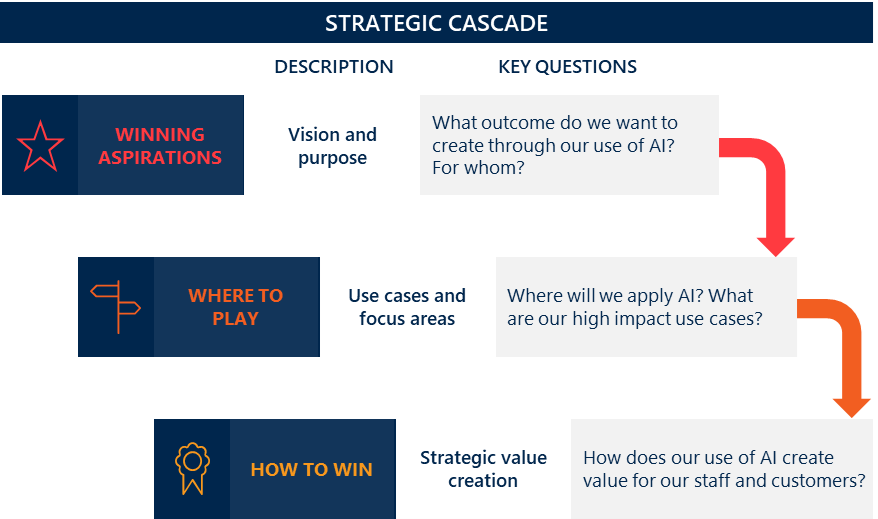

The strategic cascade (Figure 1) offers a structured and effective process to guide decision-making. It helps leaders identify where AI can create the greatest value and ensures that investment and effort are aligned with the organisation’s goals.

Suncorp reported impressive results in FY25: 2.8 million digital customer interactions handled automatically by AI, over 2 million claims summaries produced through the “Single View of Claims” platform, and more than 14,350 hours saved (since October 2024) by “Smart Knowledge”. These results stemmed from its straightforward vision: “Apply AI where it measurably improves customer experience and efficiency”. This clarity of purpose is crucial for aligning AI initiatives with organisational goals.

Responsible innovation

Unless we consciously adopt AI in responsible, ethical ways, trust in the technology is likely to remain low, increasing risk and nullifying benefits. According to the AI Incident Database, the number of reported AI-related incidents rose to 233 in 2024, a record high and a 56.4 per cent increase over 2023.

Good governance is critical to supporting innovation while appropriately managing AI-related risks. It must:

- Support strategy: Ensure that AI systems are aligned with the organisational strategy and priorities.

- Reinforce accountability: Set and disseminate agreed risk appetites and tolerances, and hold teams accountable for using AI within them.

- Monitor risks: Actively monitor and respond to evolving AI-related risks.

- Drive continuous improvement: Surface institutional lessons and use these to drive better practice.

Operating model

Organisational and individual capability and culture will either enable or stall AI innovations. Organisations must revisit their internal structure, training and development pathways, and culture to assess whether they support their AI initiatives.

ReadyTech, an ASX-listed company, exemplifies the importance of having a flexible, AI-friendly operating model. It reported significant productivity gains, including a 25 per cent increase in coding efficiency, largely thanks to its agile AI squad, which transcends organisational boundaries. By bringing together diverse expertise from across the business, this cross-functional team fosters collaboration and accelerates learning, ensuring that AI solutions are both practical and widely adopted.

Evaluation

Evaluation must occur at multiple levels, from micro-level assessments of AI models and systems to organisational-level evaluations of AI investments. Our work with clients, such as the Australian Public Service, which engaged us to evaluate the world’s largest whole-of-government trial of Microsoft Copilot, underscores the importance of evaluation in identifying success stories and refining AI strategies. Our evaluation found that agencies faced both technical and cultural adoption challenges during the trial. The evaluation also highlighted capability gaps, with participants requiring both tailored training in agency-specific use cases and general generative-AI literacy development.

AI’s potential impact remains significant. By ensuring that all four ingredients are baked into the adoption process from the outset, organisations can navigate AI’s complexities and unlock its benefits. The technology exists. The question is whether your organisation has the vision, governance, operating model and evaluation capability to make it work. Flailing wildly is not an option, but standing still while the revolution takes place around us isn’t a very good one, either.

This article is an edited version of a presentation delivered by David Diviny at the CEDA AI Leadership Summit in Brisbane on 21 October 2025.