PROGRESS 2050: Toward a prosperous future for all Australians

06/12/2020

Building confidence and trust that technology is being designed, developed and used for the benefit of people, and that risks are being considered and addressed, seems crucial to creating an environment where the community embraces the opportunities presented by emerging technologies. How we can build confidence that human and public interests are at the centre of tech decision making was the focus of this session.

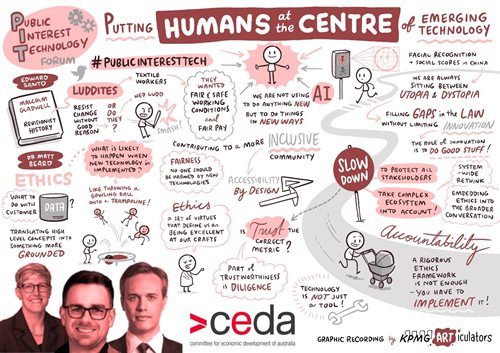

The session kicked off with Ed Santow, Human Rights Commissioner at the Australian Human Rights Commission, who began by reminding us that the Luddites were not actually opposed to new technology, as is widely assumed. Their concerns were about the impacts of that technology and about achieving safe and fair working conditions and pay. Ed argued that instead of living through a technology ‘fever dream’, in which we seemingly flip from a focus on the ‘utopian’ benefits of technology to its ‘dystopian’ harms, we need to put human rights consistently at the core of regulating emerging technologies like AI.

A strong and consistent theme in Ed’s comments was that AI is not doing anything that has not been done before; it is just doing it differently. Existing laws and regulations, such as those covering anti-discrimination, remain relevant and need to be applied. In other words, the debate about whether technology needs to be regulated is a false one.

Ed observed that ethics and ethical frameworks have an important, and rightful, role to play in filling gaps where laws and regulations are silent. In this view, ethics complements laws and regulations.

Dr Matt Beard, a Fellow at the Ethics Centre, agreed that ethics works with law and compliance rather than as a rival to them. On the relevance of ethics to tech decision making, Matt put it simply: “if you don’t have ethical processes, frameworks and safeguards, you are not building good stuff”. He used the example of household appliances: if they don’t keep you safe (think exploding toasters), it doesn’t matter how quickly they were produced or how fancy they are; they are simply not doing their job.

In discussing whether applying ethics means slowing down the pace of technology development, Matt argued that ethics is not about curtailing innovation but about guiding it so that it makes people’s lives better and reflects our values. He also noted that speed itself can be an unspoken ‘value’ in the tech development process. Failing to call that out means potential trade-offs are not surfaced, decisions tend to reflect default settings and the status quo, and interactions with an existing, complex tech ecosystem are not properly considered. Ed concluded simply: “the problem with moving fast and breaking things, is that sometimes you are breaking people.”

While agreeing on the importance of ethics frameworks, both Matt and Ed noted that, like laws and regulations, ethics frameworks make no difference if they are not rigorously applied and effectively ‘enforced’.

An important priority that emerged from this conversation is the need to build capability in ethical thinking and decision making, along with a system-wide rethink of how ethics training is delivered and undertaken. Matt’s point is that we need to start from the ground up and rethink the curriculum: “ethics and ethical decision making should not be one module at the end of [a] university course, or one hour in a 13 week discussion of machine learning and data ethics, for instance, but an entire conversation about considerations and decision making is embedded through all learning.”

Not surprisingly, a key theme throughout this conversation was ‘think should before could’.

Watch the discussion:

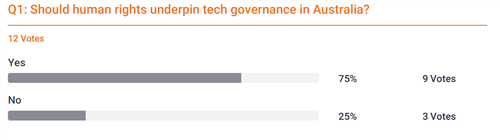

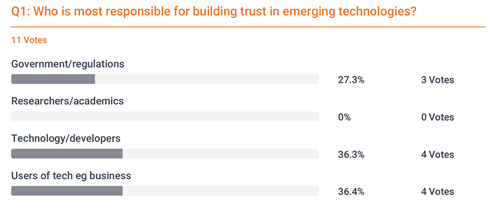

CEDA's PIT Forum was supported by our Foundation Partners: